Semantic Work Needs Its Figma Moment

How design democratized expertise—and why semantic infrastructure should do the same

By now you know you need semantic infrastructure. Maybe you’ve read the Shopify case study or my recent post about getting started, or maybe you’ve spent over a year debating internally whether you need a “semantic layer” (and if so, what exactly it should look like?!).

You understand why AI depends on semantics. You’re starting to talk with your team about how to get started.

Which means you’ll soon face a disappointing fact: even if you get buy-in, today’s tools and processes aren’t sufficient to solve this problem.

Plenty of advocates have been talking about semantics, world models, data models, and knowledge graphs, but the tooling is seriously lacking. From my point of view as a data product manager, teams who want to get started in this space face two challenges: collaboration fragmentation and the expertise gap.

First challenge: Collaboration Fragmentation

Building up knowledge requires a very diverse set of people to collaborate, from non-technical business SMEs to data professionals to governance folks. Today that knowledge is created and stored in a highly distributed fashion, and changing that takes a lot of collaboration. Yet there’s no good shared interface for building knowledge collaboratively, in structured ways, that makes sense for so many personas.

Business SMEs work out of domain systems, the Microsoft 365 suite, Confluence, etc.

Data engineers are modeling in whiteboard tools like Miro, dedicated software like Erwin, or just on a physical whiteboard in their office.

The governance team manages a data catalog and struggles to keep it up to date, because it’s no one’s primary system of record.

All across the business, people are creating knowledge and defining models, but the artifacts are siloed: disconnected, inconsistent, and certainly not a cohesive, managed ecosystem. This isn't just inconvenient; it's actively harmful. The business SME defines 'customer' one way in Confluence. The data engineer models it differently in the warehouse. The governance team tries to reconcile them in a data catalog that nobody uses as their primary tool.

Everyone works so hard to create clarity, and yet we still have semantic drift and confusion. The knowledge curation is happening; it's just happening in silos that can't talk to each other.

Second challenge: Expertise Gap

Even if you could get everyone in the same room (or tool), semantic work (building taxonomies, knowledge graphs, ontologies) requires expertise your organization doesn’t have and can’t easily acquire. This expertise genuinely matters: ontologists bring years of training in knowledge representation, logical consistency, and semantic modeling that you can’t just pick up from a tutorial. But in the early stages, you likely can’t justify hiring one, whether full-time or on contract.

Right now, I see pockets of innovation and investment in these systems and this expertise at big tech companies and in industries like biotech and e-commerce, where teams have the resources and the business case to invest in semantic experts. The rest of the world? Crickets.

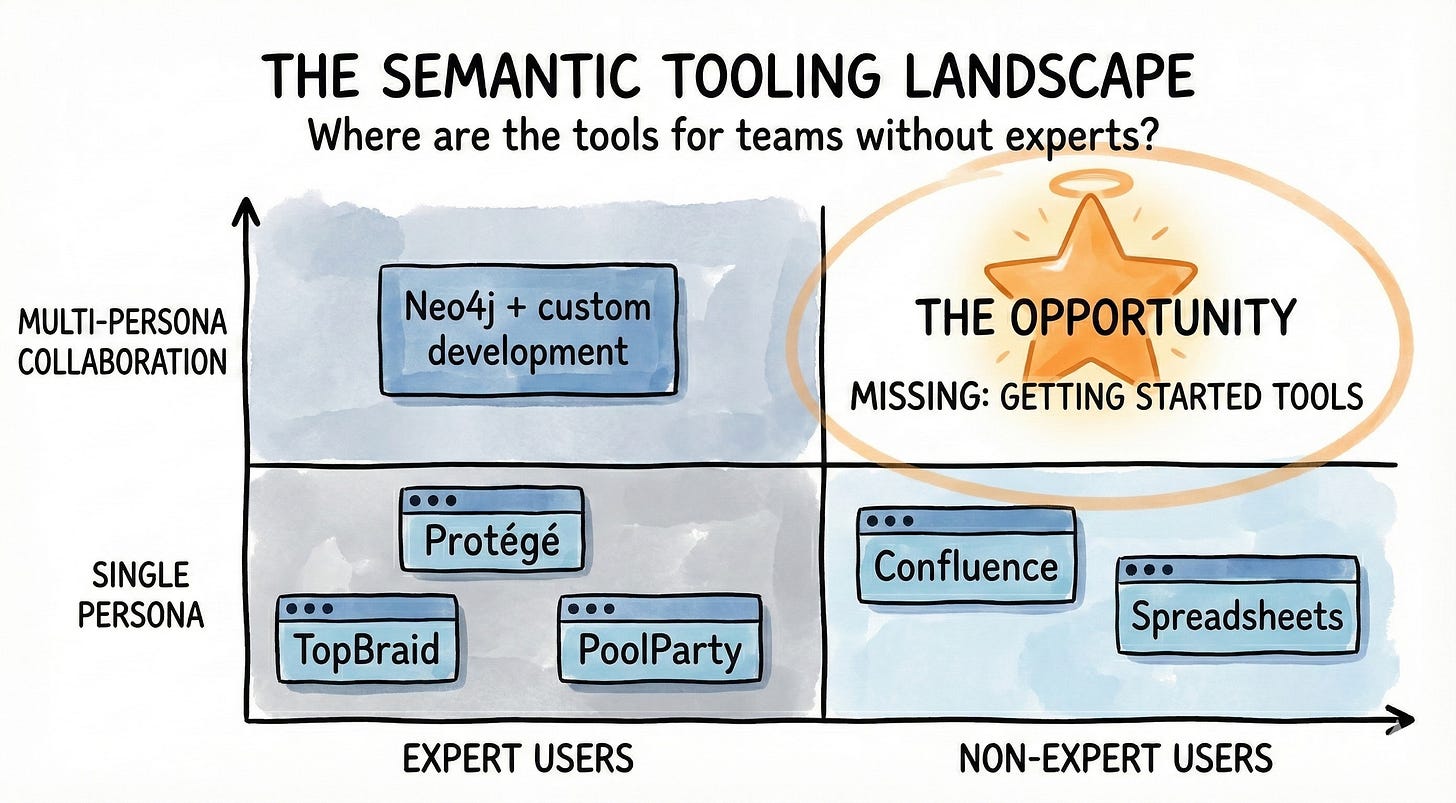

Tools like Protégé and PoolParty have highly relevant features, but they were built for expert ontologists. That leaves no entry point for data teams who need to start building semantic infrastructure before they can justify hiring more niche expertise.

Other tools for defining semantics (Snowflake semantic views, BI semantic layers, etc.) are incomplete. They aren’t feature-rich enough to support all the personas involved, and many are too siloed: they solve for one part of your stack instead of the entire platform. So that’s challenge two: fully featured tools don’t support non-expert users, and tools aimed at the team you already have aren’t fully featured enough.

Meanwhile, AI is making semantic infrastructure MORE critical, not less. That means this tooling gap isn’t just inconvenient; it’s becoming a genuine bottleneck for the industry.

What’s interesting is that design faced the same challenge fifteen years ago, and we could learn a lot from that field’s journey. The solution wasn’t simply to train more designers or to give up on quality. It was to build systems that multiplied what one designer could accomplish: design systems, plus collaborative design tooling that brought designers and their adjacent experts together.

Faced with a similar expertise and tooling gap, plus rapidly rising demand driven by AI’s need for grounding in context, semantic work needs its design system moment.

What would that look like? Tools that help teams start building before they can hire an ontologist, with guardrails against major mistakes. Collaboration workflows that let experts guide many contributors rather than gatekeep every decision. I think we can draw inspiration and lessons from how the product design community’s practice and tooling evolved over the past 15 years.

A Parallel Success Story: How Design Democratized Itself Without Undermining Expertise

Let’s rewind about a decade and a half. In the early 2010s, the web exploded, and suddenly designers were needed across far more interfaces than before (web, mobile, tablet, different screen sizes, different user personas!), creating a massive challenge for the industry.

Their solution: design systems that scale expertise, and tooling that supports designers working with their cross-functional partners.

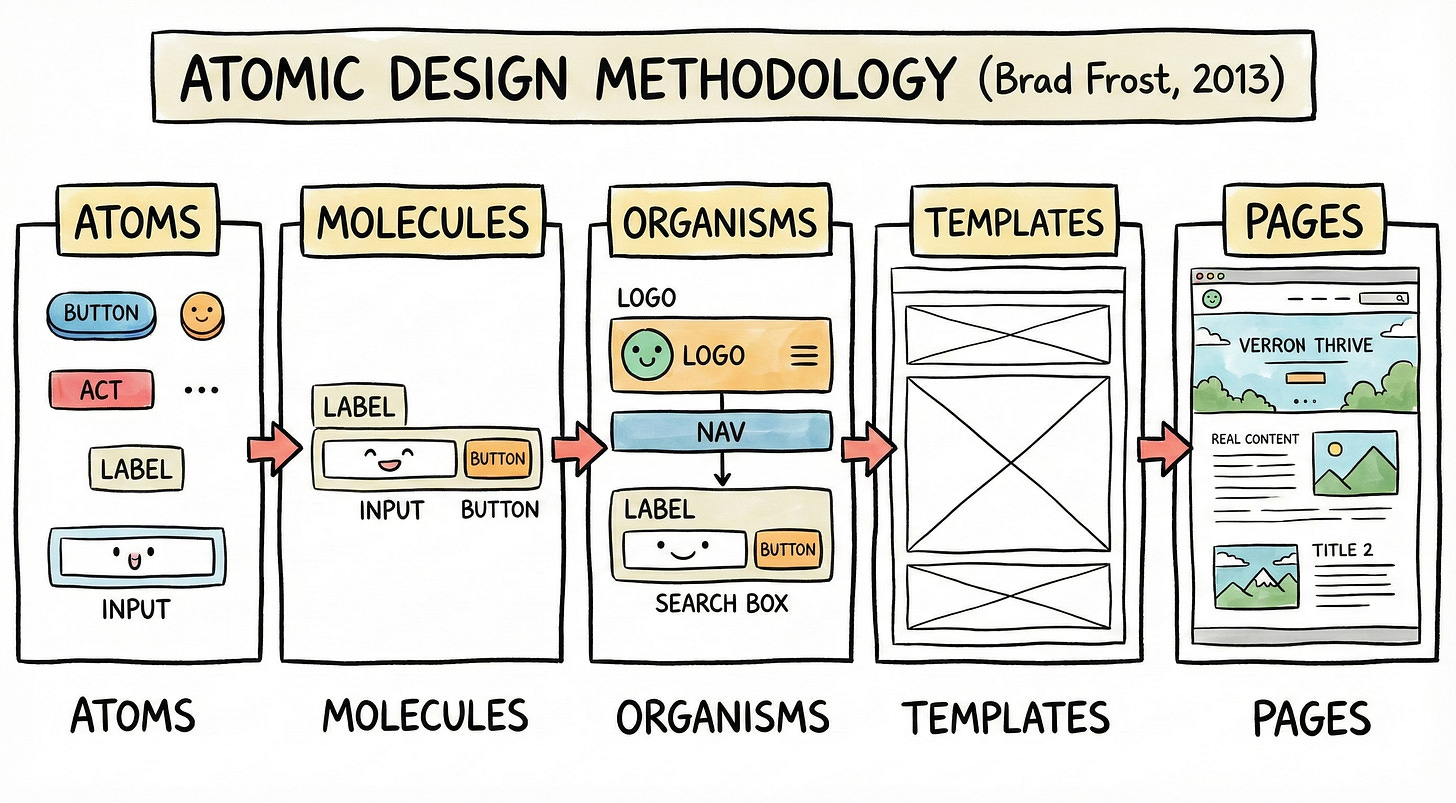

A pivotal moment seems to have come in 2013, when Brad Frost coined the “Atomic Design” methodology. The framework used a chemistry metaphor to illustrate how design could be modularized, standardized, and scaled within a product:

Atoms - smallest building blocks - buttons, labels, basic elements

Molecules - groups of atoms that work together - a search box = label + input + button

Organisms - complex components made up of molecules - a header = logo + nav + search

Templates - page level layouts with structure (wireframe-ish)

Pages - specific instances of templates with content - showing what users actually see

The insight was to build from small to large, so that when you update a component in one place, it updates everywhere. This gave designers and developers a shared vocabulary, and businesses began building design systems (e.g., Google’s Material Design in 2014 and Salesforce’s Lightning Design System in 2015).

Around the same time, Figma launched a product that let teams build from shared component libraries and expanded collaboration between designers and their teams.

Designers didn’t stop being important, but they could stop being bottlenecks, because teams could make simple decisions without them. There would never be enough designers to bring their expertise to every team and feature, but design systems encoded their decisions into reusable components. Design systems include component libraries, patterns, guidelines, and constraints that capture expert judgment.

Just like design systems scaled & democratized good design,

we need semantic systems that scale & democratize semantic design.

We need our scarce experts to become architects of the overall system &

not the implementers of every decision.

It’s worth noting that design systems are themselves an investment, with roles dedicated to building and maintaining them. I imagine they didn’t start that way at many companies. More likely, a principal designer led an early collaborative effort to unify components, and that effort eventually matured into a full-blown system with dedicated resources.

The Current State: Tools Built for Experts, Not for Getting Started

Tools for building semantics do exist (Protégé, PoolParty, TopBraid), but they assume expert knowledge and hands-on involvement. They provide power and flexibility, but they require the expert(s) to touch every decision.

Companies that build knowledge graphs end up writing custom software on top of GraphDB, Neo4j, etc.

All of this is just far too much for the team starting from nothing.

As with design before us, there are powerful tools that require experts, but no entry point for teams that just want to start adding structure and curation to today’s chaos.

Ideally, we’d have a tool that supports diverse users and includes enough validation to prevent major structural errors and catch conflicts before the tech debt grows too large. It could help harvest terms and build version-controlled vocabularies, supporting collaboration and groundwork that deliver value in the early stages of investment, before the organization is ready for more complexity.
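To make "harvest terms and catch conflicts" concrete, here is a minimal sketch, assuming definitions are collected from a few sources and compared by a normalized term name. Everything in it (`Definition`, `Glossary`, `harvest`, `conflicts`, the source labels) is hypothetical and not taken from any product, and the append-only `history` list is only a stand-in for real version control.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Definition:
    """One harvested definition of a business term and where it came from."""
    term: str
    source: str   # hypothetical source labels, e.g. "confluence" or "warehouse"
    meaning: str


@dataclass
class Glossary:
    """A toy shared vocabulary with an append-only change history."""
    entries: dict = field(default_factory=dict)   # normalized term -> [Definition]
    history: list = field(default_factory=list)   # (version, Definition) in order

    def harvest(self, d: Definition) -> int:
        """Record a definition and return the version number it created."""
        key = d.term.strip().lower()  # "Customer" and "customer" are the same term
        self.entries.setdefault(key, []).append(d)
        version = len(self.history) + 1
        self.history.append((version, d))
        return version

    def conflicts(self) -> dict:
        """Return terms whose sources disagree, with the conflicting definitions."""
        return {
            key: defs
            for key, defs in self.entries.items()
            if len({d.meaning.strip().lower() for d in defs}) > 1
        }


g = Glossary()
g.harvest(Definition("Customer", "confluence", "Anyone with a signed contract"))
g.harvest(Definition("customer", "warehouse", "Any account with at least one order"))
g.harvest(Definition("Order", "warehouse", "A confirmed purchase"))
print(sorted(g.conflicts()))  # ['customer']
```

The point is the workflow rather than the data structure: the 'customer' disagreement surfaces as soon as both definitions land in one shared place, instead of living separately in Confluence and the warehouse.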

Conclusion

Right now, semantic work is happening across your organization—it’s just happening in a fragmented way that doesn’t scale. And the fact that it doesn’t scale is going to be a blocker for AI. Business teams define terms in Confluence, data engineers model relationships on whiteboards, governance maintains a catalog that's nobody's source of truth. Everyone thinks they're building knowledge, but they're actually building silos that can’t talk to each other.

When you realize your data platform needs consistent vocabularies and your integrations require shared models, you discover the tools are incomplete: catalogs that aren’t robust enough, data modeling tools that aren’t accessible to business SMEs, generalist tools that support information gathering but lack control or connectivity, and, of course, powerful specialty tools that assume you have an expert ontologist on staff.

This is so similar to the impossible position design was in fifteen years ago. Demand exploded. Expertise was scarce. Quality mattered but couldn’t scale through hiring alone. Their answer wasn’t to give up on quality or train everyone to be expert designers. It was to build systems that encoded expert judgment into reusable components, guardrails, and patterns. One expert could architect a design system that guided hundreds of contributors toward good decisions. User-friendly tools like Figma allowed companies to scale those design systems while supporting a broad array of user personas.

Semantic work needs that same evolution—tools that multiply what experts can accomplish. Validation that prevents you from building a taxonomy with circular hierarchies. Templates for common patterns like 'product belongs to category' that you can apply without reinventing the wheel. Collaborative interfaces where your business SME and data engineer can actually work together on defining 'customer' instead of doing it separately in Confluence and the warehouse. And honest guidance about when you're in over your head and need to bring in expertise (which you absolutely will).
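As an illustration of the circular-hierarchy guardrail, here is a minimal sketch, assuming the taxonomy is stored as (narrower term, broader term) pairs. The function name `find_cycle` and the sample terms are hypothetical. It uses standard depth-first search with three node states: reaching a node that is still on the current search path means the hierarchy loops back on itself.

```python
from collections import defaultdict
from typing import List, Optional, Tuple


def find_cycle(edges: List[Tuple[str, str]]) -> Optional[List[str]]:
    """Return one cycle in a narrower -> broader hierarchy, or None if acyclic."""
    graph = defaultdict(list)
    for narrower, broader in edges:
        graph[narrower].append(broader)

    WHITE, GRAY, BLACK = 0, 1, 2   # unvisited, on current path, finished
    color = defaultdict(int)       # every node starts WHITE (0)
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        color[node] = GRAY
        path.append(node)
        for broader in graph[node]:
            if color[broader] == GRAY:
                # Slice the path from the repeated node to show the whole loop.
                return path[path.index(broader):] + [broader]
            if color[broader] == WHITE:
                found = visit(broader)
                if found is not None:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in list(graph):       # snapshot: visit() may add keys to graph
        if color[node] == WHITE:
            found = visit(node)
            if found is not None:
                return found
    return None


taxonomy = [
    ("Running Shoes", "Footwear"),
    ("Footwear", "Apparel"),
    ("Apparel", "Running Shoes"),  # mistake: closes a loop
]
print(find_cycle(taxonomy))  # ['Running Shoes', 'Footwear', 'Apparel', 'Running Shoes']
```

Returning the actual loop, rather than just flagging "invalid," is the kind of guidance a non-expert needs to fix the mistake without waiting for an ontologist.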

This isn’t about lowering standards—it’s about making expertise accessible. It’s about letting scarce experts become architects of systems rather than implementers of every decision. And it works in everyone’s favor. Organizations get access to semantic capabilities they need right now. Experts get hired into organizations that are ready for them—teams that have hit the limits of DIY approaches but have enough foundational work in place that the expert isn't starting from absolute zero.

The gap is real. The demand is real. And it’s getting more urgent as AI shifts semantic infrastructure from “nice to have” to “competitive differentiator.” If you’re building semantic tools, the getting-started use case is underserved and increasingly critical. Please build us the tools we need so we don’t have to vibe code something mediocre from scratch. If you’re a semantic expert, think about how to package your knowledge for reuse: what patterns could be templated, what guardrails would prevent common mistakes, and when to guide versus implement.

If you’re a data platform team stuck between needing semantic infrastructure and not having the expertise, you’re not alone. This gap exists because the tooling hasn’t caught up to the need. But gaps like this are where innovation happens. Design systems didn't exist until the field demanded them. I'm hoping we're at that same inflection point for semantic work, and that tooling companies rise to the occasion.

Or someone can start learning ontological knowledge engineering.
